Scripted cloud factory

Building a Docker Swarm cluster with Terraform and Ansible

Nowadays, you are just a few clicks away from getting your very own (virtual) machine for a month for the price of a pizza. It can be anywhere in the world, with a fixed IPv4 address and some data transfer included in the price. The catch is that one machine is barely enough these days. You need clusters and high availability, and if you are not careful, suddenly you are talking about eight to ten pizzas.

Handling just a couple of machines is a whole different experience. You spend hours picking the most fitting name for each one, and you install and configure software with the utmost care and love. Sometimes you even remember to log in and upgrade them. Then comes the point when there are too many of them. You stop caring about their names and simply use the machine's purpose with 01, 02, or 03 appended.

The subject of our experiment is a three-machine Docker Swarm cluster built entirely from scripts and configuration files: from the virtual machines, domain names, and installed software all the way up to the applications running on it.

Three machines are certainly not the pinnacle of high availability, but the same method works with hundreds of machines as well. The impatient among you can check out the result in the accompanying GitHub repository. So let's jump right in.

Infrastructure

For a start, we will conjure up a couple of machines out of thin air. Our charming assistant, Terraform, a prominent representative of the Infrastructure as Code movement, will help us with that. It supports many providers, such as AWS and GCP, but I will use DigitalOcean in the examples.

We will use Terraform to create the machines, put them into a project and a private network, and create a couple of DNS records pointing to them (for this to work, DigitalOcean has to manage the DNS for our domain). Let's look at a short extract from the configuration.

terraform/swarm01.tf

```hcl
provider "digitalocean" {
  # Terraform 0.12-style version pin; newer Terraform versions expect it
  # in a required_providers block instead.
  version = "~> 1.18"
}

locals {
  region = "fra1"
  image  = "ubuntu-20-04-x64"
  size   = "s-1vcpu-1gb"
}

# data blocks: existing objects that Terraform only reads
data "digitalocean_ssh_key" "default" {
  name = "name_of_the_ssh_key"
}

data "digitalocean_domain" "default" {
  name = "example.com"
}

# resource blocks: objects that Terraform creates and deletes
resource "digitalocean_vpc" "swarm01" {
  name   = "swarm01"
  region = local.region
}

resource "digitalocean_droplet" "manager01" {
  image              = local.image
  name               = "manager01.swarm01.example.com"
  region             = local.region
  size               = local.size
  private_networking = true
  vpc_uuid           = digitalocean_vpc.swarm01.id
  ssh_keys = [
    data.digitalocean_ssh_key.default.fingerprint
  ]
}

# The record name is relative to the domain: manager01.swarm01.example.com
resource "digitalocean_record" "manager01" {
  domain = data.digitalocean_domain.default.name
  type   = "A"
  name   = "manager01.swarm01"
  value  = digitalocean_droplet.manager01.ipv4_address
  ttl    = "3600"
}
```

The data blocks describe things that already exist and that we don't want Terraform to manage: Terraform relies on their existence, and we can refer to them from other parts of the configuration. The resource blocks describe things that Terraform creates (or deletes). Sadly, we cannot derive the droplet's name from the DNS record's FQDN: the record already references the droplet's IPv4 address in its value, so this would create a circular reference, which Terraform does not allow.

It could be a good idea to use lower TTL values for the DNS records at first. That way, if we delete and recreate the whole system for some reason, we don't have to wait long for cached DNS entries to expire and the names to point to the new IP addresses.

For this to work, we need an environment variable named DIGITALOCEAN_ACCESS_TOKEN. With that in place, we can run terraform init to download the provider plugins, then terraform apply to create everything. After some waiting, we will have three new machines.

Here is a quick tip for keeping this sensitive token out of your shell history:

```shell
$ read -s DIGITALOCEAN_ACCESS_TOKEN
$ export DIGITALOCEAN_ACCESS_TOKEN
```

Software

No matter how much you want it, these machines won't turn into a Docker Swarm cluster by themselves. And if we want scaling up to stay simple, we don't want to do the installation and setup manually either.

Luckily, someone has already solved this problem. For example, we can use Ansible, which sets up machines based on YAML configuration files. Because we chose Ubuntu machines earlier, these configs will be a bit Debian/Ubuntu specific, but the tool itself supports many operating systems.

First, Ansible needs to know about the machines it works with. For this, it will need a hosts file:

ansible/hosts

```ini
[managers]
manager01.swarm01.example.com

[workers]
worker[01:02].swarm01.example.com
```
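To make the [01:02] range notation concrete, here is a simplified Python re-implementation of how such a host pattern expands into individual host names. It is an illustration only, not Ansible's own code: the function name expand_host_pattern is hypothetical, and it handles a single numeric range with an optional stride (Ansible also accepts alphabetic ranges, which this sketch ignores).

```python
import re

# Matches one numeric range such as "[01:02]" or "[1:10:2]" (start:end:stride).
_RANGE = re.compile(r"\[(\d+):(\d+)(?::(\d+))?\]")


def expand_host_pattern(pattern: str) -> list[str]:
    """Expand a single numeric [start:end(:stride)] range in a host name.

    Hypothetical helper for illustration; alphabetic ranges and multiple
    ranges per name are not handled.
    """
    match = _RANGE.search(pattern)
    if match is None:
        return [pattern]  # plain host name, nothing to expand
    start_s, end_s, stride_s = match.groups()
    stride = int(stride_s) if stride_s else 1
    # A leading zero in the start value means "pad to the same width".
    width = len(start_s) if start_s.startswith("0") else 0
    prefix, suffix = pattern[: match.start()], pattern[match.end():]
    return [
        f"{prefix}{str(n).zfill(width)}{suffix}"
        for n in range(int(start_s), int(end_s) + 1, stride)  # end is inclusive
    ]


print(expand_host_pattern("worker[01:02].swarm01.example.com"))
# prints ['worker01.swarm01.example.com', 'worker02.swarm01.example.com']
```

The zero padding follows the start value, which is why worker[01:02] yields worker01 and worker02, matching the DNS names created with Terraform.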

A big part of the configuration consists of tasks. Each task calls a module, such as apt, which, unsurprisingly, can be used to install the packages a machine needs:

ansible/roles/docker/tasks/main.yml

```yaml
- name: install prerequisite packages
  apt:
    name:
      - apt-transport-https
      - ca-certificates
      - curl
      - gnupg-agent
      - software-properties-common
      - python3-pip
    update_cache: yes
```

There is also a module for managing Docker Swarm. For example, this is how we can initialize a manager node:

ansible/roles/swarm-manager/tasks/main.yml

```yaml
- name: init docker swarm
  docker_swarm:
    advertise_addr: "{{ ansible_facts.eth1.ipv4.address }}"
    state: present
```

A slightly more complex example is joining a worker to the cluster:

ansible/roles/swarm-worker/tasks/main.yml

```yaml
- name: get swarm info
  docker_swarm_info:
  delegate_to: "{{ groups.managers[0] }}"
  register: swarm_info

- name: join swarm
  docker_swarm:
    state: join
    join_token: "{{ swarm_info.swarm_facts.JoinTokens.Worker }}"
    remote_addrs:
      - "{{ hostvars[groups.managers[0]]['ansible_eth1']['ipv4']['address'] }}"
    advertise_addr: "{{ ansible_facts.eth1.ipv4.address }}"
```

To set up a worker, we need a join token from the manager node, so the first task runs on the manager and registers its result. Unfortunately, accessing the manager node's private IPv4 address (eth1 on these droplets) is less than elegant, but I couldn't find a nicer solution.
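The data flow of these two tasks can also be sketched directly against the Docker SDK for Python (the docker package, which the Ansible Docker modules depend on). This is a minimal sketch, not what the Ansible modules do internally: join_worker is a hypothetical helper, and it assumes two already-connected docker.DockerClient objects (for example created with docker.DockerClient(base_url="ssh://root@<host>")) plus the nodes' private addresses.

```python
"""Worker-join flow sketched with the Docker SDK for Python.

Assumption: manager_client and worker_client are connected docker.DockerClient
objects; manager_addr and worker_addr are the nodes' private (eth1) IPs.
"""

SWARM_MANAGEMENT_PORT = 2377  # default Swarm cluster-management port


def join_worker(manager_client, worker_client, manager_addr: str, worker_addr: str) -> None:
    # Counterpart of the docker_swarm_info task: inspect the swarm on a
    # manager node, where the inspect result includes the join tokens.
    swarm = manager_client.swarm
    swarm.reload()
    token = swarm.attrs["JoinTokens"]["Worker"]

    # Counterpart of the docker_swarm task with state: join.
    worker_client.swarm.join(
        remote_addrs=[f"{manager_addr}:{SWARM_MANAGEMENT_PORT}"],
        join_token=token,
        advertise_addr=worker_addr,
    )
```

Passing the clients in as parameters keeps the function free of connection details, so the same code works over SSH, a local socket, or (as in a test) a fake object.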

After running the ansible-playbook setup.yml command and waiting a little, we will have a working Swarm cluster. At this point, it is worth securing the machines a bit with more task files, for example by setting up firewall rules or by installing and configuring fail2ban.
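As a starting point for the firewall rules, the sketch below lists the ports Docker's documentation names for Swarm traffic (2377/tcp, 7946/tcp and udp, 4789/udp) and opens them only to the private network, while SSH, HTTP, and HTTPS stay public. The helper swarm_ufw_rules and the CIDR 10.114.0.0/20 are hypothetical; take the real range from the VPC. In the playbook itself, the same rules would map onto Ansible's ufw module rather than a Python script.

```python
# Ports Swarm nodes use to talk to each other, per Docker's Swarm documentation.
SWARM_PORTS = [
    (2377, "tcp"),  # cluster management
    (7946, "tcp"),  # communication among nodes
    (7946, "udp"),  # communication among nodes
    (4789, "udp"),  # overlay network (VXLAN) traffic
]

# Publicly reachable ports: SSH for Ansible, HTTP/HTTPS for Traefik.
PUBLIC_TCP_PORTS = [22, 80, 443]


def swarm_ufw_rules(private_cidr: str) -> list[str]:
    """Return ufw commands that expose Swarm ports to the private network only."""
    rules = ["ufw default deny incoming"]
    rules += [
        f"ufw allow from {private_cidr} to any port {port} proto {proto}"
        for port, proto in SWARM_PORTS
    ]
    rules += [f"ufw allow {port}/tcp" for port in PUBLIC_TCP_PORTS]
    rules.append("ufw --force enable")  # --force skips the interactive prompt
    return rules


for rule in swarm_ufw_rules("10.114.0.0/20"):  # hypothetical VPC range
    print(rule)
```

Keep in mind that ports published by Docker are handled by Docker's own iptables rules, so ufw on its own does not restrict them.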

Applications

Before installing any other application on the cluster, we need a reverse proxy: a single entry point that decides which application should receive each request and forwards it there.
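As a mental model of that decision, the toy router below looks only at the scheme and the Host header, mirroring the Traefik routers configured later in this section: plain HTTP is redirected to HTTPS, and HTTPS requests are forwarded to the service whose host rule matches. ROUTES and route are hypothetical names, and this is not how Traefik is implemented.

```python
from typing import Optional, Tuple

# Host name -> backend service, mirroring the Host(...) router rules.
ROUTES = {
    "traefik.swarm01.example.com": "api@internal",
    "whoami.swarm01.example.com": "whoami_service",
}


def route(scheme: str, host: str, path: str = "/") -> Tuple[str, Optional[str]]:
    """Decide what the entry point does with a request.

    Returns ("redirect", url) for plain HTTP, ("forward", service) for HTTPS,
    and ("not_found", None) when no rule matches the Host header.
    """
    service = ROUTES.get(host.lower())  # DNS names are case-insensitive
    if service is None:
        return ("not_found", None)
    if scheme == "http":
        # The HTTP ("web") routers only apply the redirect-to-HTTPS middleware.
        return ("redirect", f"https://{host}{path}")
    return ("forward", service)


print(route("http", "whoami.swarm01.example.com", "/hello"))
# prints ('redirect', 'https://whoami.swarm01.example.com/hello')
```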

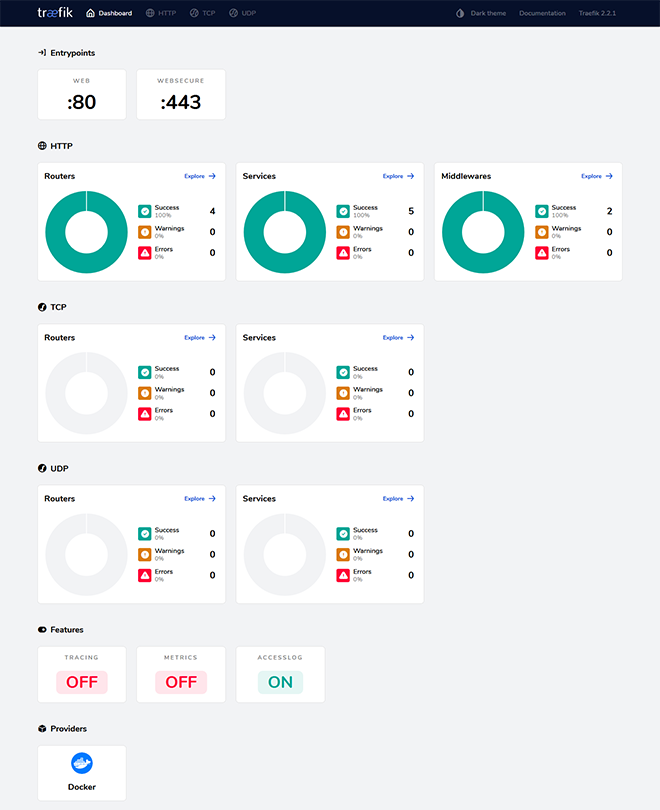

Traefik is an excellent fit for our system. It can be configured with Docker labels, and it can obtain certificates from Let's Encrypt for our HTTPS connections.

How will this end up on Swarm? We need a Compose file, and Ansible modules handle the rest:

ansible/roles/stacks/tasks/main.yml

```yaml
# The template module does not create missing parent directories.
- name: create swarm stack directory
  file:
    path: /etc/swarm
    state: directory

- name: generate traefik stack
  template:
    src: traefik.yml.j2
    dest: /etc/swarm/traefik.yml

- name: create traefik config directory
  file:
    path: /etc/traefik/acme
    state: directory

- name: create traefik network
  docker_network:
    name: traefik-net
    driver: overlay

- name: deploy traefik stack
  docker_stack:
    name: traefik
    compose:
      - /etc/swarm/traefik.yml
```

This renders the Compose template onto the machine, creates a directory for storing the Let's Encrypt certificates, creates a Docker overlay network shared by Traefik and the other applications, and finally deploys the stack.

The Compose file mentioned above is not the simplest. We need to configure many Traefik-related things, and doing so as Docker labels is not so user-friendly.

ansible/roles/stacks/templates/traefik.yml.j2

```yaml
version: "3.2"

services:
  traefik:
    image: traefik:v2.2.1
    command:
      - "--accesslog=true"
      # Enable the API and the dashboard
      - "--api=true"
      - "--api.dashboard=true"
      # We get our configuration from Docker Swarm
      - "--providers.docker=true"
      - "--providers.docker.exposedbydefault=false"
      - "--providers.docker.swarmMode=true"
      # Serve content on ports 80 and 443
      - "--entrypoints.web.address=:80"
      - "--entrypoints.websecure.address=:443"
      # Give us some free TLS certificates
      - "--certificatesresolvers.myresolver.acme.email=something@example.com"
      - "--certificatesresolvers.myresolver.acme.storage=/etc/traefik/acme/acme.json"
      - "--certificatesresolvers.myresolver.acme.tlschallenge=true"
      - "--certificatesresolvers.myresolver.acme.httpchallenge=true"
      - "--certificatesresolvers.myresolver.acme.httpchallenge.entrypoint=web"
    ports:
      - target: 80
        published: 80
        protocol: tcp
        mode: host
      - target: 443
        published: 443
        protocol: tcp
        mode: host
    deploy:
      # This line and the host mode in ports are required to get the proper
      # request address in our access logs
      mode: global
      placement:
        constraints:
          - node.role == manager
      labels:
        - "traefik.enable=true"
        - "traefik.docker.network=traefik-net"
        # Basic auth middleware for the Dashboard
        - "traefik.http.middlewares.test-auth.basicauth.users=test:$$2y$$05$$ooiVn4yz0coSR28J9O5wNuvGHyZPCAaRYSeDXDdKCkbbtKO31LJ1K"
        - "traefik.http.middlewares.test-auth.basicauth.removeheader=true"
        # Redirect middleware from http to https
        - "traefik.http.middlewares.test-redirectscheme.redirectscheme.scheme=https"
        - "traefik.http.middlewares.test-redirectscheme.redirectscheme.permanent=true"
        # http endpoint is redirect only
        - "traefik.http.routers.api.rule=Host(`traefik.swarm01.example.com`)"
        - "traefik.http.routers.api.middlewares=test-redirectscheme"
        - "traefik.http.routers.api.entrypoints=web"
        # https endpoint serves the API and Dashboard
        - "traefik.http.routers.api-secure.rule=Host(`traefik.swarm01.example.com`)"
        - "traefik.http.routers.api-secure.service=api@internal"
        - "traefik.http.routers.api-secure.middlewares=test-auth"
        - "traefik.http.routers.api-secure.entrypoints=websecure"
        - "traefik.http.routers.api-secure.tls=true"
        - "traefik.http.routers.api-secure.tls.certresolver=myresolver"
        # Dummy service for Swarm port detection; any valid port number works
        - "traefik.http.services.dummy-svc.loadbalancer.server.port=9999"
    volumes:
      # Certificates will be saved to the host machine
      - /etc/traefik/acme:/etc/traefik/acme
      - /var/run/docker.sock:/var/run/docker.sock
    networks:
      - traefik-net

networks:
  traefik-net:
    external:
      name: traefik-net
```

We used a template instead of a plain old file here. It has no advantage yet, but we will see later why it can be useful to keep Compose files as templates.

After running the playbook (ansible-playbook deploy.yml), we will have a working Traefik Dashboard at https://traefik.swarm01.example.com/.
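The dashboard is protected by the test-auth basic-auth middleware, whose label holds an htpasswd-style "user:hash" entry with every $ doubled: Compose treats a single $ as the start of variable interpolation, so a literal dollar sign must be written as $$ (Jinja2 leaves $$ untouched, so this also survives the template step). A minimal sketch of building such a label; compose_escape and basicauth_label are hypothetical helpers, and the input is assumed to be a bcrypt line such as the one htpasswd -nB test prints (htpasswd ships in the apache2-utils package on Ubuntu).

```python
def compose_escape(value: str) -> str:
    """Escape a literal value for a Compose file: "$" must be written as "$$"."""
    return value.replace("$", "$$")


def basicauth_label(middleware: str, htpasswd_line: str) -> str:
    """Build a Traefik basicauth label from one htpasswd "user:hash" line."""
    user, sep, hashed = htpasswd_line.strip().partition(":")
    if not sep or not user or not hashed:
        raise ValueError("expected a 'user:hash' line, e.g. from htpasswd -nB")
    escaped = compose_escape(f"{user}:{hashed}")
    return f"traefik.http.middlewares.{middleware}.basicauth.users={escaped}"


print(basicauth_label("test-auth", "test:$2y$05$ooiVn4yz0coSR28J9O5wNuvGHyZPCAaRYSeDXDdKCkbbtKO31LJ1K"))
# prints traefik.http.middlewares.test-auth.basicauth.users=test:$$2y$$05$$ooiVn4yz0coSR28J9O5wNuvGHyZPCAaRYSeDXDdKCkbbtKO31LJ1K
```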

Our own applications can be deployed similarly: we build a Docker image of the application, push it to a registry, write a Compose file with the proper Traefik labels, and deploy it with Ansible.

For the sake of an example, let's look at how to configure a whoami application. The application itself doesn't do much: it displays information about the HTTP request it received (which container served it and which headers it got). That makes it a perfect choice for testing Swarm: the output shows that different requests are handled by different replicas, which Swarm spreads across the machines of the cluster.
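To check this claim once whoami is deployed, we can request the page a few times and count the distinct values of the Hostname line in the responses. This sketch assumes whoami's plain-text output contains a "Hostname: ..." line; that hostname identifies the container (by default its ID), so `docker service ps whoami_whoami` is still needed to map containers to nodes. The function names are hypothetical, and the URL is the one configured in the Compose file.

```python
import urllib.request
from collections import Counter


def hostname_of(body: str) -> str:
    """Return the value of the "Hostname:" line in a whoami response body."""
    for line in body.splitlines():
        if line.startswith("Hostname:"):
            return line.split(":", 1)[1].strip()
    raise ValueError("no Hostname line in response")


def count_backends(bodies) -> Counter:
    """Count how many responses each container (hostname) produced."""
    return Counter(hostname_of(body) for body in bodies)


def fetch(url: str) -> str:
    # One plain GET request per call.
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8")
```

Usage, against the live cluster: `count_backends(fetch("https://whoami.swarm01.example.com/") for _ in range(9))`. With three healthy replicas, the counter should show three different hostnames.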

ansible/roles/stacks/templates/whoami.yml.j2

```yaml
version: "3.2"

services:
  whoami:
    image: containous/whoami:v1.5.0
    deploy:
      replicas: 3
      update_config:
        parallelism: 1
        delay: 10s
      labels:
        - "traefik.enable=true"
        - "traefik.docker.network=traefik-net"
        # Redirect middleware from http to https
        - "traefik.http.middlewares.test-redirectscheme.redirectscheme.scheme=https"
        - "traefik.http.middlewares.test-redirectscheme.redirectscheme.permanent=true"
        # http endpoint is redirect only
        - "traefik.http.routers.whoami.rule=Host(`whoami.swarm01.example.com`)"
        - "traefik.http.routers.whoami.middlewares=test-redirectscheme"
        - "traefik.http.routers.whoami.entrypoints=web"
        # https endpoint serves the application
        - "traefik.http.routers.whoami-secure.rule=Host(`whoami.swarm01.example.com`)"
        - "traefik.http.routers.whoami-secure.entrypoints=websecure"
        - "traefik.http.routers.whoami-secure.service=whoami_service"
        - "traefik.http.routers.whoami-secure.tls=true"
        - "traefik.http.routers.whoami-secure.tls.certresolver=myresolver"
        - "traefik.http.services.whoami_service.loadbalancer.server.port=80"
    networks:
      - traefik-net
    environment:
      - "SECRET={{ secret }}"

networks:
  traefik-net:
    external:
      name: traefik-net
```

The labels are almost the same as in the Traefik configuration. The environment section holds a little surprise: the templating fun mentioned earlier. We can store sensitive data as an encrypted Ansible Vault value:

ansible/roles/stacks/vars/main.yml

```yaml
secret: !vault |
  $ANSIBLE_VAULT;1.1;AES256
  30326530326164646161636239316436333339393835633164396535373739323933333432333937
  3536623538346139333639626263386263353333656565660a663763616335356561613366323964
  64633962643139656362356164373633616165333130623034383561383165396137623136653734
  6334316262626336660a343739343036323533626330633365643439623233663234396264376639
  3333
```

When the playbook is run with the --ask-vault-pass flag, Ansible asks for the Vault password, decrypts the value, and deploys the Compose file with the secret substituted in.
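To see what is actually stored in the vars file, the sketch below splits a Vault envelope into its parts without decrypting anything. It relies on the AES256 vault format as I understand it: a ";"-separated header (marker, format version, cipher, and optionally a vault ID label), followed by a hex-encoded body that decodes to three newline-separated hex strings (salt, HMAC, ciphertext). parse_vault_envelope is a hypothetical helper; the decryption itself is left to Ansible.

```python
import binascii


def parse_vault_envelope(text: str) -> dict:
    """Split an Ansible Vault envelope into header fields and payload parts.

    No decryption happens here; the parts stay hex-encoded.
    """
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    header = lines[0].split(";")
    if header[0] != "$ANSIBLE_VAULT" or len(header) < 3:
        raise ValueError("not an Ansible Vault envelope")
    # The body is hex; decoded, it holds salt, HMAC, and ciphertext (each hex).
    payload = binascii.unhexlify("".join(lines[1:]))
    salt, hmac, ciphertext = payload.split(b"\n")
    return {
        "version": header[1],
        "cipher": header[2],
        "vault_id": header[3] if len(header) > 3 else None,
        "salt": salt.decode("ascii"),
        "hmac": hmac.decode("ascii"),
        "ciphertext": ciphertext.decode("ascii"),
    }
```

Applied to the secret above, this yields format 1.1, cipher AES256, a 64-character salt, a 64-character HMAC, and a short ciphertext. Values in this `!vault |` form are what `ansible-vault encrypt_string` produces.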

Monitoring

The simplest way to check on the cluster is to SSH into the manager machine and run docker commands there, but the docker CLI can also do this for us through a context:

```shell
$ docker context create swarm01 --docker "host=ssh://root@manager01.swarm01.example.com"
$ docker context use swarm01
$ docker service ls
ID             NAME              MODE         REPLICAS   IMAGE                      PORTS
hnjenj5royp1   traefik_traefik   global       1/1        traefik:v2.2.1
b8gwkvtih4z6   whoami_whoami     replicated   3/3        containous/whoami:v1.5.0
$ docker service logs -f traefik_traefik
[...]
```

For a more comfortable monitoring experience, we can fire up an Elastic Stack (with the help of Terraform and Ansible, of course) and send all the logs from our machines there.

Scaling up

Let's start with the easiest case. If we run short of worker capacity, we just need another droplet block in our Terraform config and a small change to our Ansible hosts file; after rerunning both tools, our new worker is ready to handle traffic. Of course, we can also increase the size of the worker droplets (more CPU cores, more memory).
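With the range notation in the hosts file, adding a worker mostly means bumping the upper bound of the range. A hypothetical render_inventory helper that writes the hosts file for one manager and a given number of workers, assuming the naming scheme used throughout this article, could look like this:

```python
def render_inventory(cluster: str, domain: str, workers: int) -> str:
    """Render the Ansible hosts file for one manager and `workers` workers."""
    suffix = f".{cluster}.{domain}"
    lines = ["[managers]", f"manager01{suffix}", "", "[workers]"]
    if workers == 1:
        lines.append(f"worker01{suffix}")
    elif workers > 1:
        # Two-digit, zero-padded range, e.g. worker[01:03]
        lines.append(f"worker[01:{workers:02d}]{suffix}")
    return "\n".join(lines) + "\n"


print(render_inventory("swarm01", "example.com", 3))
```

The matching droplet and DNS record still have to be added on the Terraform side, so both files change together in the same commit.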

If we are pushing the limits of our manager node, things get a bit more problematic. We can easily increase the size of the droplet until we hit the biggest one... but after that? Running multiple manager nodes is certainly possible, but its goal is high availability rather than performance, and with this setup it causes other problems as well (for example, we can no longer simply store the certificates in files on the host machine). Another option is to simply create a swarm02 cluster and move some of the applications there.
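Docker's documentation describes the Swarm managers as a Raft group: a cluster of N managers needs a majority (quorum) to make decisions and tolerates losing at most (N-1)/2 of them. The quick calculation below (manager_quorum is a hypothetical helper) shows why extra managers buy availability rather than throughput: every added manager also raises the quorum, and an even count adds no extra fault tolerance.

```python
def manager_quorum(managers: int) -> dict:
    """Raft quorum arithmetic for a Swarm with `managers` manager nodes."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    quorum = managers // 2 + 1        # majority needed to make decisions
    tolerated = (managers - 1) // 2   # managers that may fail without losing quorum
    return {"quorum": quorum, "tolerated_failures": tolerated}


print(manager_quorum(3))
# prints {'quorum': 2, 'tolerated_failures': 1}
```

For example, 3 and 4 managers both tolerate a single failure, which is why odd manager counts are the usual recommendation.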

Another scaling problem can be the human factor, once you are no longer the only one modifying the system. At that point, it is not advisable for everyone to keep dangerous API tokens on their machines and run terraform apply locally. Instead, we can hand this job to a build server and make changes to the system through merge requests. For reviewing those, Terraform provides the terraform plan command, which shows what terraform apply would do. Similarly, Ansible has the --check flag, which reports the modifications it would make without making them.
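On the build server, the review step can hinge on the -detailed-exitcode flag of terraform plan, which makes the exit code distinguish "no changes" (0), "error" (1), and "changes present" (2). Below is a minimal sketch of such a CI step, assuming terraform is on the PATH and the job runs in the directory holding the configuration; run_plan and interpret_plan_exit_code are hypothetical names.

```python
import subprocess


def interpret_plan_exit_code(code: int) -> str:
    """Map `terraform plan -detailed-exitcode` exit codes to a CI decision."""
    if code == 0:
        return "no-changes"        # infrastructure already matches the config
    if code == 2:
        return "changes-pending"   # plan succeeded and contains changes to review
    return "error"                 # 1 (or anything else): the plan itself failed


def run_plan(workdir: str) -> str:
    # Save the plan to a file so that a later, approved job applies exactly it.
    result = subprocess.run(
        ["terraform", "plan", "-detailed-exitcode", "-input=false", "-out=tfplan"],
        cwd=workdir,
    )
    return interpret_plan_exit_code(result.returncode)
```

After the merge request is approved, a follow-up job could run terraform apply tfplan, and the Ansible side can be previewed in the same pipeline with ansible-playbook deploy.yml --check --diff.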

Summary

With Terraform and Ansible, we can create and manage hundreds of machines (and other services) on different cloud providers, while Docker Swarm and Traefik take care of our applications and related tasks (like scaling and certificates). Even better, all of this is described in good old plain text files that we can keep in a version control system.